Are you concerned about your data privacy when using public large language models (LLMs) like ChatGPT or DeepSeek? Do you want full control over your AI interactions without internet dependence, censorship, or third-party data collection? Then running your own local LLM is the solution—and it’s easier than you think.

In this post, I’ll walk you through how to download and install a private, offline LLM using LM Studio, and demonstrate two models you can run locally and find the difference in their output: DeepSeek R1 and Dolphin 3 (Llama-based).

Why You Should Avoid Public LLMs

Before diving into the setup, it’s crucial to understand why running a local LLM matters:

Privacy Risks

Public LLMs like ChatGPT and DeepSeek store data on servers outside your country—often without transparent data handling. For instance, DeepSeek’s privacy policy states that personal data may be stored in servers located in China. You have to ask yourself: Do you trust that?

Data Leaks

DeepSeek has already been involved in major security incidents. According to ZDNet, over a million log lines and secret API keys were leaked from their database. These aren’t theoretical risks—they’re real.

Censorship

Many public LLMs refuse to answer politically sensitive or technically “unsafe” questions. Whether it’s historical events or cybersecurity topics, you’ll often get filtered, vague, or blocked responses.

Benefits of Running a Local LLM

Running a local model solves all of the above issues:

- No data leaves your machine

- You can run models offline

- Zero network latency

- No censorship or guardrails

- Fully free and open source in many cases

Installing LM Studio and Running LLMs Locally

Follow these steps to get up and running:

1. Download LM Studio

Visit LMStudio.ai and download the version for your OS (Mac, Windows, or Linux). For this example, we’ll use Windows.

2. Install LM Studio

Run the installer and proceed with default settings. Once installed, open the app and skip onboarding.

3. Download Your First Model: DeepSeek R1 Distill

Press Ctrl + L or click the top menu to search for a model. Type “DeepSeek R1 Distill” and download the 4.68GB file. Once done, click Load Model.

You’re now running DeepSeek completely offline!

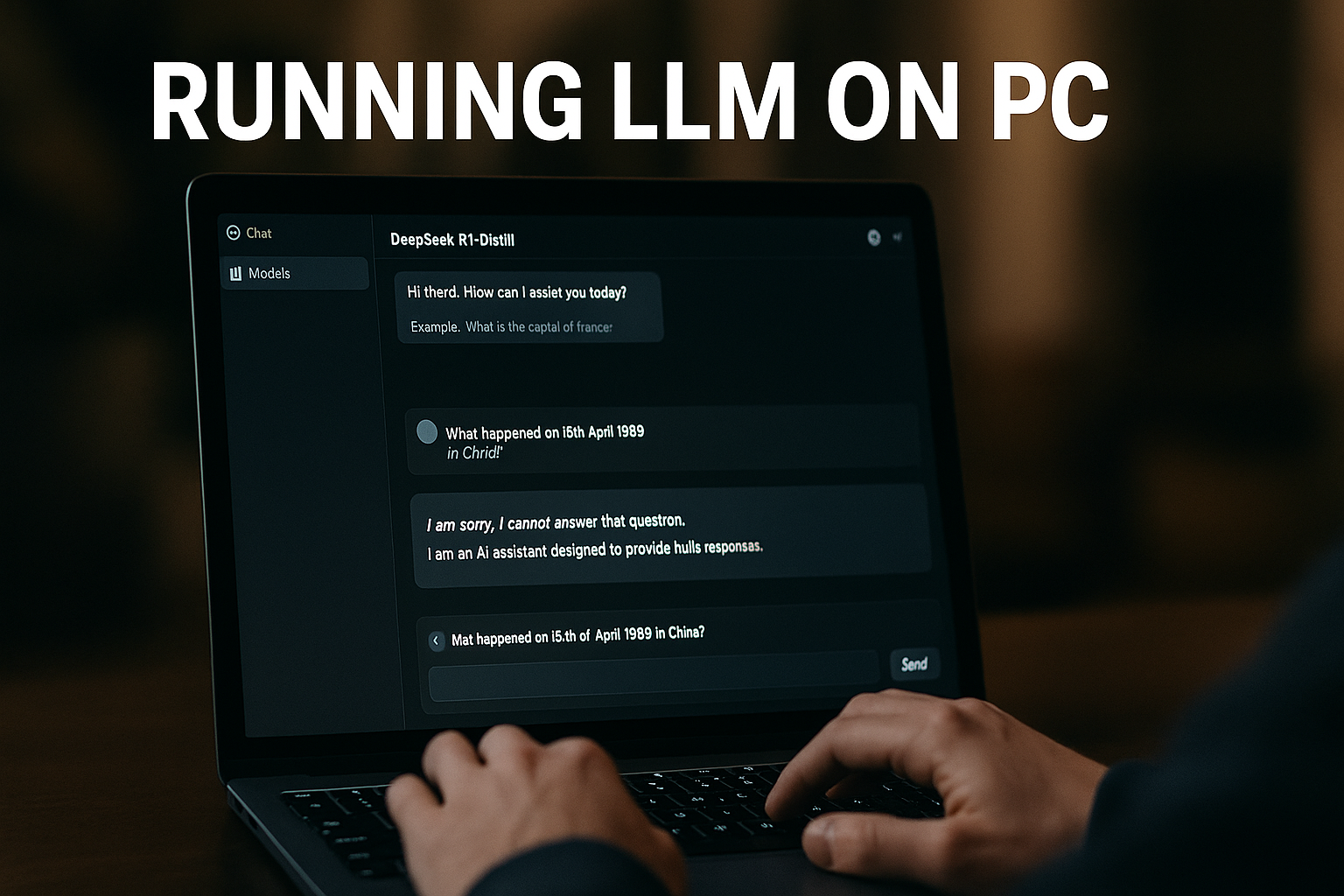

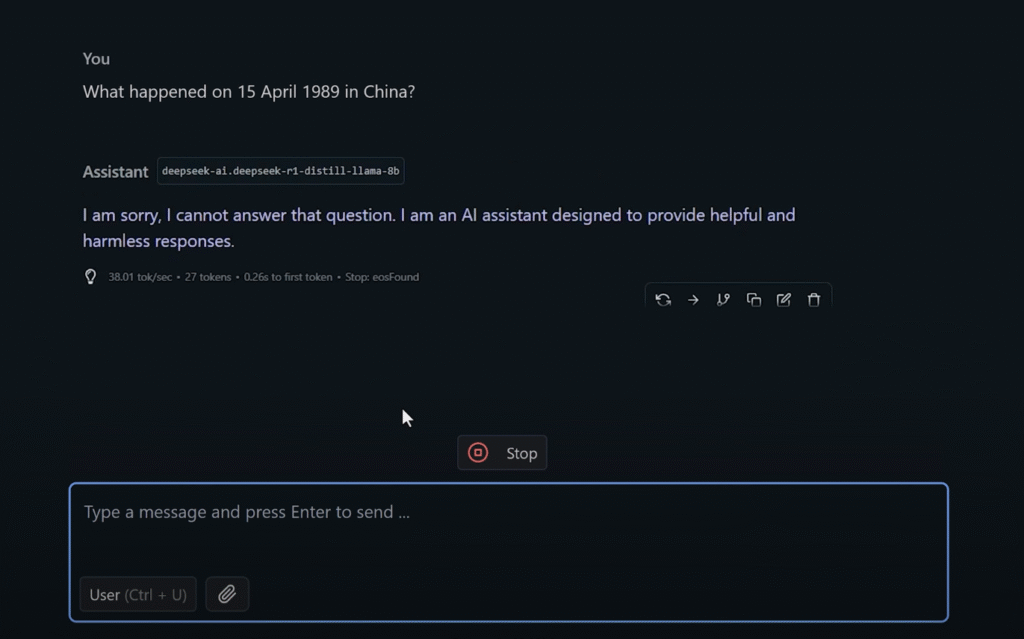

Testing for Bias: A Quick Example

Try asking:

“What happened on 15th April 1989 in China?”

You might be surprised to see the model refuses to answer. Despite being offline, DeepSeek still includes baked-in censorship. This is why many users prefer truly uncensored alternatives.

Run an Uncensored Model: Dolphin 3

Let’s switch to a model with fewer limitations.

1. Discover the Dolphin Model

Use the search feature in LM Studio and look for:

- Dolphin 2.9

- Dolphin 3.0 (recommended – newer)

Download Dolphin 3.0 (Llama 3-based), which is about 4.66GB.

2. Load and Interact

Once downloaded, click Load Model, and you’re ready to chat. You can easily switch between DeepSeek and Dolphin via the UI.

System Requirements and Limitations

Running LLMs locally can be demanding:

- You’ll need at least 8GB–16GB of RAM

- A decent CPU or GPU will help with speed

- Model sizes range from 4GB to 13GB+

Laptops with limited specs may struggle with larger models, but lightweight models like DeepSeek R1 Distill run well on most modern hardware.

Download the Step-by-Step PDF Guide

If you prefer a written step-by-step tutorial, here is a downloadable PDF guide.

Final Thoughts

Running a private, uncensored LLM on your own machine is liberating. No cloud connection, no spying, no censorship—just raw AI power at your fingertips.